How do we hold our devices?

The following is a partial repost of a very interesting article from UXmatters. This part explains how we hold our devices.

Why Should People Use Phones with One Hand?

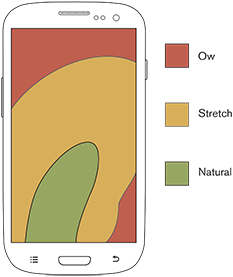

Josh Clark’s one-handed reach charts have been popular ever since they came out because they gave a voice to the assumption that everyone holds their phone with one hand. Supposedly, this is primarily because the iPhone’s 3.5-inch screen is the perfect size for one-handed use, with the implication that other sizes are not. The idea, shown in Figure 2, is that people can comfortably touch anything within the arc that the thumb can naturally reach, but stretching to reach other parts of the screen causes them discomfort.

Figure 2—Classic, but flawed representation of the comfort of a person’s one-handed reach on a smartphone

But even ignoring the fact that people do much or most of their tapping with more than one hand on their phone, this assumption about reach seems flawed. We don’t have stretchy fingers, so reaching farther doesn’t hurt; it simply becomes impossible! There are limits to what people can reach. I have repeatedly observed people adjusting the way they’re holding their phone so they can interact with the rest of the screen.

The sales data that I mentioned earlier indicates that people don’t seem to mind this. People keep buying large devices, and not all of them are people with large hands. In my research, a lot of participants use a second hand for tapping. No one complains about this, even when I test dedicated iPhone users by giving them larger Android phones; they just adapt.

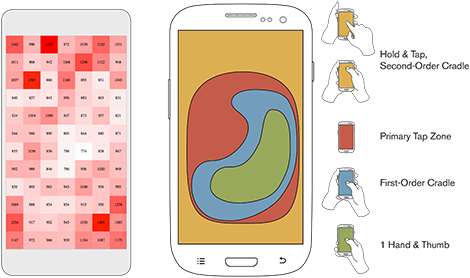

People Tap in Multiple Ways

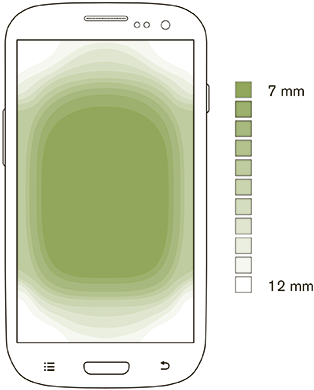

One nice thing about doing your own research is that you have this giant pile of data that you can refer back to whenever something sounds worth investigating. So, I sifted through some of my data on how people were holding their devices and replotted the charted positions of taps for a 5.1-inch phone (a small phablet). In a very recent article, Mikkel Schmidt posed a similar question about the one-handed use of mobile phones and performed his own study of tap times on an iPhone 5S (a 4-inch phone) held with only one hand.

Both of our results demonstrate similar findings, as you can see in Figure 3. Our research on touching a mobile device’s screen—including the speed of using the thumb to touch the screen on the left and people’s preference for tapping on the right—shows that, while the center of the screen is still the easiest part to tap, people can easily shift their hold to touch anywhere on the screen.

Figure 3—The center is easiest to tap, but people can shift their hands to touch anywhere.

As I’ve observed before, users are most accurate and fastest when touching the central area of the screen. This finding holds even when grasp and reach are restricted to one-handed use. But when people can choose their grip, those grasping with one hand and tapping with that hand’s thumb typically use only a fairly small area of the screen this way. Extending beyond that area seems to present no particular burden: most users simply slow down and add a hand to stabilize the device, allowing more reach for the thumb and enabling them to get to the entire primary tapping area.

According to my observations, interactions outside this central area almost always involve the use of two hands, with users either cradling the device and moving the tapping hand to reach something or simply holding the device in one hand and tapping it with the index finger of their other hand.

So, instead of thinking that reaching various zones of a mobile phone’s screen becomes increasingly burdensome to the user, we should understand that people can easily reach any part of the screen. If they’re not comfortable with the way they’re currently holding the device, they’ll simply change their grip to one that works better for them.

Three-Dimensional Hands on Flat Screens

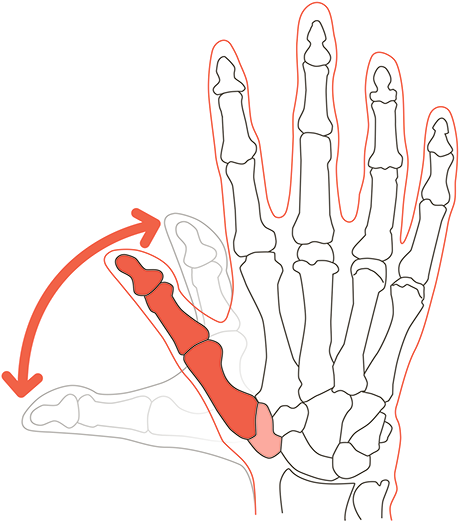

While giving you a full understanding of the biomechanics of the hand would be interesting, it’s too lengthy to go into here. Basically, the thumb moves in a sweeping range—of extension and flexion—not from the point where it connects with the rest of your hand, but at the carpometacarpal joint way down by your wrist. The other joints on your thumb let it bend toward the screen, but provide no extra sweep motion. Bending is important because, while the free range of the thumb’s movement is in three-dimensional space, touchscreens are flat, so a limited part of the thumb’s range of movement gets mapped onto the phone’s screen.

We often forget that, because people live in the real world, their interactions with devices occur in the layer where we convert reality into flat representations of input and output. Physiologically, your thumb includes the bones that extend all the way down to your wrist, as shown in Figure 4. Plus, the thumb’s joints, tendons, and muscles interact with your other digits—especially the position of the index finger. If your fingers are grasping a handset, there is a more limited range of motion available to the thumb. But moving your fingers lets you change the area your thumb can reach.

Figure 4—The thumb’s bones extend all the way down to your wrist.

The thumb is your hand’s strongest digit, so using the thumb to tap means holding the handset with your weaker fingers. Your fingers’ limited range of motion and reduced strength seem to result in the use of a second hand for cradling the device. Plus, in any case where users may encounter or expect jostling or vibration, they tend to cradle the device, using one hand just to hold it and securing the device with their non-tapping thumb. Recent research by Alexander Ng et al. has explored the effect of trying to carry other objects while walking and using the phone. One-handed use causes a particularly high reduction in accuracy in this situation.

There has also been some small-scale research that explores our understanding of physiology in relation to modern touchscreens and models that predict our interactions with them, but it’s still quite early days for these. I have some concerns that they are not yet applicable to our design work because they do not correlate well to people’s observed behavior. But I have hopes that useful models will eventually emerge, and we’ll be able to refer to preferred and effective tap areas for each device and population type.

People Switch, a Lot

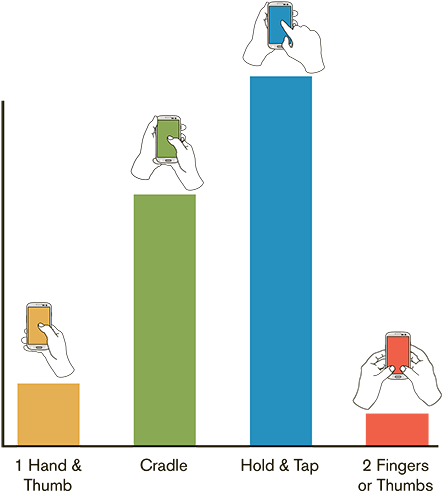

Don’t forget that these charts are all about right-handed use, which by no means covers all mobile-phone use. According to my observations, about 11% of taps are with a finger or thumb of the left hand. Note how I wrote that. This is not about right-handed people, but right-handed use.

The assumption that most mobile-phone use is with one hand is false—even more so for larger phones, or phablets. In my research, fewer than half of all participants used one hand throughout a test session, with the rest switching regularly between their hands. People use their non-dominant hand, and they frequently switch hands, as well as the way they’re gripping the phone, as shown in Figure 5. Touch is contextual, so assume that people will switch hands.

Figure 5—Switching hands

The average number of times that people switched hands in my tests was more than two, and across 15 inputs, some switched up to ten times. There is no obvious correlation between the hand a person uses and the location or type of touch target or the type of interaction (for example, whether a user opens or selects items). Sometimes people give focus to a field using one hand, then switch to the other hand to scroll or select an item.
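To make the counting concrete, here is a minimal Python sketch of how switches might be tallied from a log of which hand made each input. The `count_hand_switches` function and the sample session are hypothetical illustrations, not the study's tooling or its data.

```python
from typing import Sequence


def count_hand_switches(hands: Sequence[str]) -> int:
    """Count how often two consecutive inputs were made with different hands."""
    return sum(1 for prev, cur in zip(hands, hands[1:]) if prev != cur)


# Hypothetical session of 15 inputs ("L" = left hand, "R" = right hand).
# This is made-up data for illustration only, not data from the research.
session = ["R", "R", "L", "R", "R", "R", "L", "L",
           "R", "R", "R", "R", "L", "R", "R"]

switches = count_hand_switches(session)
left_share = session.count("L") / len(session)

print(f"{switches} switches across {len(session)} inputs")
print(f"{left_share:.0%} of inputs made with the left hand")
```

For this made-up session, the function reports 6 switches, and 4 of the 15 inputs (about 27%) come from the left hand. Note that it counts use by hand, not the handedness of the person.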

To those who, after looking at these numbers, insist that they and all of their friends hold their phone with one hand: it’s true that we usually hold our phone with one hand while looking at it or carrying it around. But when it comes to interacting with our phones, most of us use both hands. Based on my numerous observations, over half of interactions are not purely with one hand, even on small phones.

While the incidence of using one hand for holding and touching phablets was lower, as expected, it is not zero even on phablets. Close to 10% of interactions with phablets were with one hand and its thumb. About half of the participants whom I observed waiting, talking, or reading used a different idle grip (usually one-handed), then changed to another hold when actually interacting with the phone.

Reachability and One-Handed Operation

Apple has built in a feature to help accommodate one-handed users. Double-tapping (rather than pressing) the Home key causes the top of the screen to slide down for easier one-handed reach. Samsung has an even more radical feature for its Note series, shrinking the contents of the entire screen to an area simulating a 4-inch screen in the lower-right corner. Plus, after-market products such as keyboards are available along the same lines.

I have no reason to believe that most users will care about any of these features. Why? Largely because each of these is yet another invisible function that does something unnatural, so it has to be learned. I expect that people will instead simply keep moving their hands around to get to the right part of the screen. We have to accept that people have bought large phones because they want large screens, and design for them with the assumption that users are comfortable interacting with them.

Designing for Large Phones

So how should you design for these phablets? The same way you design for phones. Put key content and controls in the middle of the screen, and place only secondary controls along the edges. As I and others have discovered, people are more accurate and faster when touching the center of the screen, so targets along the edges of the screen need to be larger and have more spacing between them, especially at the top and bottom edges.

Figure 6—People are most accurate when touching the center of the screen.

You might ask, as people have, why not follow this data to the obvious extreme and put all tap targets in the central area where we know people are accurate and can reach targets with one hand? Well, in part, because that central zone varies so much according to a device’s size and a user’s grasp and handedness that it is challenging to define. But, in reality, we’re already doing this for the most part. If your designs place the primary viewing, tapping, and scrolling areas in the middle of the viewport and place only secondary or rarely used options at the edges or corners of the screen, you are on the right path to designing for the way people use their phones.

Despite the already-known differences in touch accuracy by zone, people do not seem to be inconvenienced or significantly slowed by shifting their grip to reach the various parts of a screen. As far as I can tell, there really is no downside to using larger handsets or placing controls outside the primary thumb-tapping zone.

Although touch targets at the periphery of the screen must be larger to ensure that people can tap them accurately and people will slow down a bit when tapping them, we can solve these issues during design—by choosing the proper items to place in the corners and making them large enough. For phablets and small tablets, I design user interfaces and lay out screens using the same guidelines and patterns that I use when designing touchscreen phones.
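One way to express the larger-targets-at-the-edges guideline in a layout tool is to scale the minimum target size with distance from the center of the screen. The sketch below is only an illustration: the `Screen` type, the `min_target_size_mm` function, and the 7 mm and 11 mm defaults are assumptions chosen for the example, not measurements from this article. It also treats all four edges alike, although the top and bottom edges may deserve extra allowance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Screen:
    """Physical screen dimensions in millimeters (hypothetical helper type)."""
    width_mm: float
    height_mm: float


def min_target_size_mm(
    x_mm: float,
    y_mm: float,
    screen: Screen,
    center_size_mm: float = 7.0,   # placeholder value, not from the article
    edge_size_mm: float = 11.0,    # placeholder value, not from the article
) -> float:
    """Interpolate a minimum target size from the center out to the edges."""
    half_w = screen.width_mm / 2
    half_h = screen.height_mm / 2
    # Normalized distance from the center on each axis: 0 at center, 1 at edge.
    dx = abs(x_mm - half_w) / half_w
    dy = abs(y_mm - half_h) / half_h
    # Use the larger of the two so that nearness to any edge enlarges the target.
    edge_factor = min(1.0, max(dx, dy))
    return center_size_mm + (edge_size_mm - center_size_mm) * edge_factor


# Example: a hypothetical 64 mm x 112 mm screen.
phone = Screen(width_mm=64.0, height_mm=112.0)
print(min_target_size_mm(32.0, 56.0, phone))  # target at the center
print(min_target_size_mm(0.0, 0.0, phone))    # target in the top-left corner
```

A design team would replace the placeholder sizes with values from its own testing. The point of the sketch is only that target size becomes a function of position, so secondary controls placed in the corners automatically get more room.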

It is always good to remember that, no matter what the new device, user interface, or service, we still design for people. Understand how people work first: how they see, think, feel, and touch. Those patterns never change and provide useful guidance when designing new user experiences.

References

Bergstrom-Lehtovirta, Joanna, and Antti Oulasvirta. “Modeling the Functional Area of the Thumb on Mobile Touchscreen Surfaces.” Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems (CHI ’14). New York: ACM, May 2014.

Callender, Mark N. “A Conceptual Design of a General Aviation Hands-on-Throttle and Stick (HOTAS) System.” University of Tennessee, 2003. Retrieved October 14, 2014.

Federal Aviation Administration. The Human Factors Design Standard (HFDS). (PDF) Federal Aviation Administration: Washington, DC, updated in 2012. Retrieved October 14, 2014.

Hoober, Steven. “Insights on Switching, Centering, and Gestures for Touchscreens.” UXmatters, September 2, 2014. Retrieved October 14, 2014.

—— “Design for Fingers and Thumbs Instead of Touch.” UXmatters, November 11, 2013. Retrieved August 18, 2014.

—— “How Do Users Really Hold Mobile Devices?” UXmatters, February 18, 2013. Retrieved August 18, 2014.

—— “Common Misconceptions About Touch.” UXmatters, March 18, 2013. Retrieved August 18, 2014.

Hoober, Steven, and Patti Shank. “Making mLearning Usable: How We Use Mobile Devices.” The eLearning Guild, April 2014.

Hurff, Scott. “How to Design for Thumbs in the Era of Huge Screens.” Quartz, September 19, 2014. Retrieved October 14, 2014.

Ng, Alexander, Stephen A. Brewster, and John H. Williamson. “Investigating the Effects of Encumbrance on One- and Two-Handed Interactions with Mobile Devices.” Proceedings of the 32nd Annual ACM Conference on Human Factors in Computing Systems (CHI ’14). New York: ACM, May 2014.

Oremus, Will. “The One Big Problem with the Enormous New iPhone.” Slate, September 19, 2014. Retrieved October 14, 2014.

Schmidt, Mikkel. “Mobile UI Ergonomics: How Hard Is It Really to Tap Different Areas of Your Phone Interface?” Medium, October 17, 2014. Retrieved October 18, 2014.

Source: http://www.uxmatters.com/mt/archives/2014/11/the-rise-of-the-phablet-designing-for-larger-phones.php